Logging with pino.js - log to file, http and even email

January 13 2153 Words

Table of Contents

What's pino.js?

I have heard that pino.js is one of the best logging libraries for node.js, so I just checked their GitHub repo, it's:

- Popular. It has 12.7k stars on GitHub.

- Actively maintained. The latest commit was just a few hours ago.

- Well documented. It's easy to read and to get what we need from the doc.

But first, what's pino.js? Let's check the GitHub description first:

🌲 super fast, all natural json logger

A fast json logger? That is what I might need. Just like the description, their benchmarks seem to be reliable:

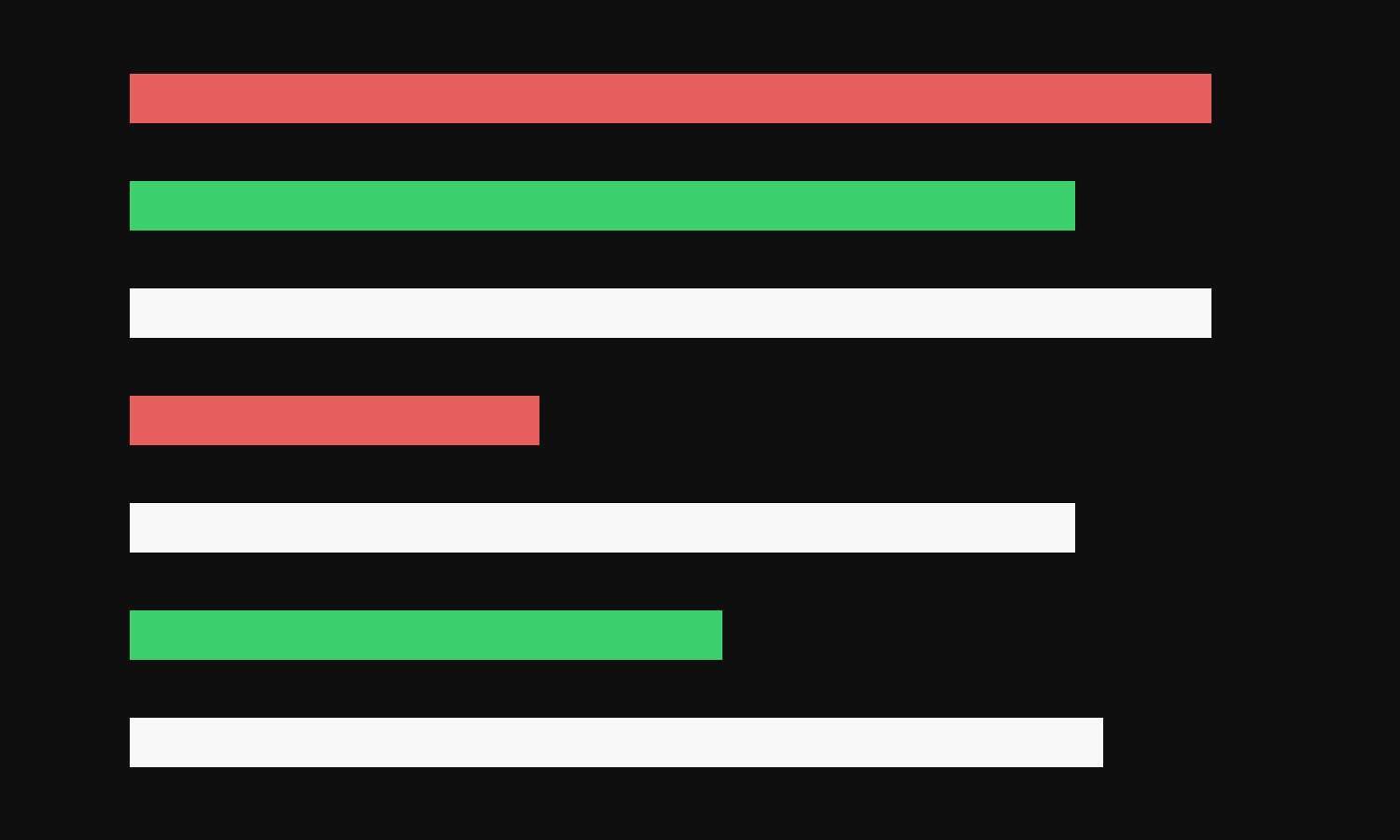

BASIC benchmark averages

Bunyan average: 377.434ms

Winston average: 270.249ms

Bole average: 172.690ms

Debug average: 220.527ms

LogLevel average: 222.802ms

Pino average: 114.801ms

PinoMinLength average: 70.968ms

PinoNodeStream average: 159.192ms

Cool, but what really amazed me was its Transports API:

Pino transports can be used for both transmitting and transforming log output.

With the transports API, we can easily send our logs anywhere: stdout, file, cloud logging service, email, etc. Every transport will be executed in a separate worker thread, which means the performance impact of logging can be very slow.

Seems very powerful, let's start our logging with pino.js:

pnpm add pino

javascript

index.mjs

import pino from "pino";

const logger = pino();

logger.info("Show me");

A JSON log will print to our terminal:

{"level":30,"time":1705230235082,"pid":94,"hostname":"DESKTOP-B2KN7CC","msg":"Show me"}

Prebuilt transports

As mentioned before, the Transports AP is powerful if we want to log everywhere, but we can also use pino.js without a custom transport, there are some prebuilt transports we can use for basic use cases.

Pretty transport

pino-pretty can format the default JSON logs, this gives us much more readable outputs.

Let's try:

pnpm add pino-pretty

javascript

index.mjs

import pino from "pino";

const logger = pino({

transport: {

target: "pino-pretty",

},

});

logger.info("Show me");

Above code, we have introduced a transport, pino-pretty is the target of our transport, which writes logs through a custom transport exported by the pino-pretty package.

The new log line includes the timestamp, level, pid, and message, and it's colorized by default.

[19:08:16.720] INFO (120): Show me

Much more readable!

File transports

pino also has a built-in transport called pino/file, which will write our log to std streams or files:

javascript

index.mjs

const logger = pino({

transport: {

target: "pino/file",

options: {

// 1 to STDOUT

// 2 to STDERR

// string to file

destination: "./app.log",

},

},

});

The above code will write our logs to a file called app.log:

plaintext

app.log

{"level":30,"time":1705232522784,"pid":159,"hostname":"DESKTOP-A6LC7MM","msg":"Show me"}

pino-pretty supports a file destination too, we can write readable logs to files:

javascript

index.mjs

const logger = pino({

transport: {

target: "pino-pretty",

options: {

destination: "./app.log",

// We need to turn colorize off to get plain text logs.

colorize: false,

},

},

});

Custom transports

We may need more advanced logging use cases, such as writing logs to a file and sending the logs to some cloud logging service.

This is where custom transports come in, and they are very useful in these use cases.

To use a custom transport, we need to create a separate transport js first:

javascript

my-transport.mjs

// Our implementation

And then set the file path as our transport target:

javascript

index.mjs

const logger = pino({

transport: {

target: "my-transport.mjs",

},

});

Rotating file transport

Let's say, we need log rotation for our logging. It writes logs into separate log files, these files are named by the logging date so we can easily check them by dates.

The destination of pino/file and pino-pretty transport is static, so we can't use them for log rotation.

But implementing the rotation logic in the transport? I would not because there are many file stream rotation libraries here, all we need to do is write logs to the rotating stream.

In the sample below, we use rotating-file-stream library and pino-abstract-transport to write our transport.

Let's add dependencies:

pnpm add pino-abstract-transport rotating-file-stream

Then implement the transport:

javascript

pino-transport-rotating-file.mjs

import build from "pino-abstract-transport";

import * as rfs from "rotating-file-stream";

import * as path from "path";

export default async function (options = { dir }) {

if (options.dir == null) {

throw new Error("Missing required option: dir");

}

const pad = (num) => (num > 9 ? "" : "0") + num;

// The log filename generator

const generator = (time, index) => {

if (!time) return path.join(options.dir, "app.log");

const date =

time.getFullYear() +

"-" +

pad(time.getMonth() + 1) +

"-" +

pad(time.getDate());

const filename = `${date}.${index}.log`;

return path.join(options.dir, filename);

};

const rotatingStream = rfs.createStream(generator, {

size: "10M", // rotate every 10 MegaBytes written

interval: "1d", // rotate daily

compress: "gzip", // compress rotated files

immutable: true,

});

return build(

(source) => {

// Stream our logs to the rotating stream.

source.pipe(rotatingStream);

return rotatingStream;

},

{

parse: "lines",

// Close is needed to flush the stream, otherwise the logs will be lost.

async close() {

await new Promise((resolve) => {

rotatingStream.end(() => resolve());

});

},

}

);

}

Let's use it in our index.mjs:

javascript

import pino from "pino";

import * as path from "path";

const logger = pino({

transport: {

target: "./pino-transport-rotating-file.mjs",

options: { dir: path.join(process.cwd(), "logs") },

},

});

logger.info("Show me");

If everything goes fine, we will get this log file:

plaintext

./logs/2024-01-14.1.log

{"level":30,"time":1705238823829,"pid":522,"hostname":"DESKTOP-A6LC7MM","msg":"Show me"}

Cool! How about pretty logging for these files?

We can use a stream Transform and the prettyFactory from pino-pretty:

javascript

pino-transport-rotating-file.mjs

...

import { prettyFactory } from "pino-pretty";

import { Transform, pipeline } from "stream";

export default async function () {

...

return build(

(source) => {

const pretty = prettyFactory({ colorize: false });

const prettyStream = new Transform({

objectMode: true,

autoDestroy: true,

transform: (chunk, encoding, callback) => {

callback(null, pretty(chunk.toString()));

},

});

pipeline(source, prettyStream, rotatingStream, (err) => {

if (err) {

console.error("Failed to write log in transport:", err);

}

});

return prettyStream;

},

...

);

}

Finally, our logs become readable again:

plaintext

./logs/2024-01-14.1.log

[21:39:23.876] INFO (576): Show me

HTTP transports

We can also write our logs to a cloud logging service over fetch() in the transport. This is an example of sending logs via Grafana Loki HTTP API.

javascript

pino-transport-loki.mjs

import build from "pino-abstract-transport";

export default async function (options = { baseUrl, username, apiToken }) {

/**

* @type {Array<Promise>} Send tasks.

*/

const tasks = [];

return build(

async (source) => {

// We use an async iterator to read log lines.

for await (let line of source) {

const task = sendToLoki(

options.baseUrl,

options.username,

options.apiToken,

line

);

tasks.push(task);

}

return source;

},

{

parse: "lines",

async close() {

// Wait for all send tasks to complete.

await Promise.all(tasks);

},

}

);

}

function sendToLoki(baseUrl, username, apiToken, line) {

const logData = JSON.parse(line);

const logItems = [[(logData.time * 1000000).toString(), logData.msg]];

const body = {

streams: [

{

stream: {

label: "testing",

},

values: logItems,

},

],

};

return fetch(baseUrl + "/loki/api/v1/push", {

method: "POST",

headers: {

"Content-Type": "application/json",

Authorization:

"Basic " + Buffer.from(username + ":" + apiToken).toString("base64"),

},

body: JSON.stringify(body),

});

}

Let's log in to our Grafana Dashboard, if it works fine, we will get the log on our Loki dashboard:

2024-01-15 09:22:23.054 Show me

Email transport

Like the HTTP transport, we can also set up email alerts for our logging. We use the nodemailer package to send emails.

Let's write our transport:

javascript

pino-transport-email.mjs

import build from "pino-abstract-transport";

import { prettyFactory } from "pino-pretty";

import nodemailer from "nodemailer";

export default async function (

options = { smtpHost, smtpPort, smtpUser, smtpPass, sendTo }

) {

const { smtpHost, smtpPort, smtpUser, smtpPass, sendTo } = options;

const emailTransporter = nodemailer.createTransport({

host: smtpHost,

port: smtpPort,

secure: true,

auth: {

user: smtpUser,

pass: smtpPass,

},

});

/**

* @type {Array<Promise>} Send tasks.

*/

const tasks = [];

return build(

async (source) => {

const pretty = prettyFactory({ colorize: false });

// We use async iterator to read log lines.

for await (let line of source) {

const task = emailTransporter

.sendMail({

from: smtpUser,

to: sendTo,

subject: "SomeApp Logging Alert",

text: `Your app has logged an alert:\n${pretty(line)}.`,

})

.then((info) => console.log(info));

tasks.push(task);

}

return source;

},

{

parse: "lines",

async close() {

// Wait for all send tasks to complete.

await Promise.all(tasks);

},

}

);

}

And add it to our targets:

javascript

index.mjs

const logger = pino({

transport: {

targets: [

...,

{

target: "./pino-transport-email.mjs",

options: {

smtpHost: "smtp.gmail.com",

smtpPort: 465,

smtpUser: "[email protected]",

smtpPass: "GOOGLE_APP_PASSWORD",

sendTo: "TARGET_ADDRESS",

},

},

],

},

});

If everything goes fine, we will get an email from our logging service:

Your app has logged an alert:

[10:12:24.635] INFO (502): Show me

Transport levels

We have created three custom transports for our logging service:

- File transport, log everything to the rotating file stream.

- HTTP transport, log everything to our Loki endpoint.

- Email transport, send an alert email for every logging.

Our logging service is doing too much and we don't want an alert email for every logging. In this case, we can use the level option to filter the logs that come to our transport.

javascript

index.mjs

const logger = pino({

transport: {

targets: [

{

target: "pino-pretty",

options: { destination: 1 },

},

{

target: "./pino-transport-rotating-file.mjs",

level: "warn",

options: { ... },

},

{

target: "./pino-transport-loki.mjs",

level: "error",

options: { ... },

},

{

target: "./pino-transport-email.mjs",

level: "fatal",

options: { ... },

},

],

},

});

// >> stdout

logger.info("I'm good.");

// >> stdout and file

logger.warn("Something may not be right.");

// >> stdout, file, and loki

logger.error("Something goes wrong.");

// >> Every transport will receive this log

logger.fatal("Something really bad just happened.");

Don't log (too much)

We built an interesting and strong logging service based on pino.js that can send our logs anywhere. But I have to say:

In most cases, you probably don't need these.

Even if pino.js has great performance and can write logs asynchronously in separate worker threads, it still consumes resources.

The file transport is fast but could have a noticeable overhead when logging too much, the HTTP transport takes time to send a request, and the email transport even takes more time. These I/O will surely slow down our computer.

If you are sure that your App works fine, you don't need logs.

Conclusion

With custom transports in pino.js, whether for rotating file streams, cloud logging, or even email alerts, they can be integrated quickly. In node.js, pino.js is a really good choice for logging.

That's all of this blog.

Happy logging! but don't log too much.

You can find all source code in this GitHub repo.